Isn’t it fun to create your own ML model without needing expertise in data pre-processing, feature extraction, dimensionality reduction, or hyperparameter optimization?

But what is AutoML?

Automated Machine Learning (AutoML) automates the end-to-end process of applying machine learning to real-world problems, from preparing the data to making predictions on real-world data. Several steps normally come before selecting a model for a dataset, such as data pre-processing, feature engineering, feature extraction, and feature selection. To improve predictive performance, hyperparameter tuning must be performed too. AutoML simplifies this whole workflow, especially for a newcomer to ML.

What is PyCaret?

PyCaret is an open-source, low-code ML library that takes the user from preparing the data to deploying a model in a few lines of code. This lets users reach conclusions faster by reducing the cycle time from hypothesis to insights. Under the hood, PyCaret is a wrapper around other ML libraries.

Installation

pip install pycaret

# To upgrade an existing version

pip install --upgrade pycaret

# To create a conda environment using anaconda prompt

conda create --name envname python=3.6

conda activate envname

pip install pycaret

All the dependencies are installed along with pycaret. The full list is given in PyCaret's requirements file.

Import the required module based on the task. There are 6 available modules in pycaret, and importing one sets up the environment for that task only. For example, if the regression module has been imported, the environment is set up to perform regression tasks only.

from pycaret.classification import * # Classification model

from pycaret.regression import * # Regression model

from pycaret.clustering import * # Clustering

from pycaret.anomaly import * # Anomaly Detection

from pycaret.nlp import * # NLP

from pycaret.arules import * # Association Rule Mining

Setup Function

Setup is the initial and mandatory step before building a model. A few pre-processing tasks are performed by default; in addition, pycaret provides other pre-processing features to improve performance.

Syntax for setup

_setup = setup(data, target=' ') # data: dataset; target: name of the target column

Default pre-processing tasks

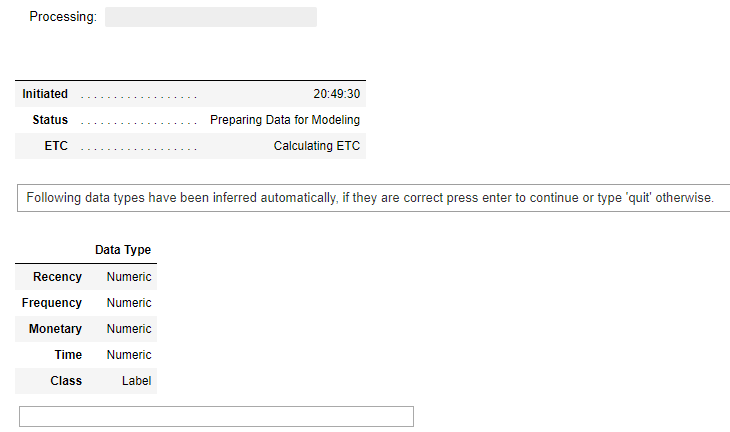

Data Type Specification

This step determines the data types of the features in the dataset. After setup is executed, a dialogue appears listing each feature with its inferred data type. If the data types are correct, the user can press “Enter” to continue; if there are any discrepancies between a feature and its data type, the user can type “quit”.

The figure shows the features of the blood dataset with their data types.

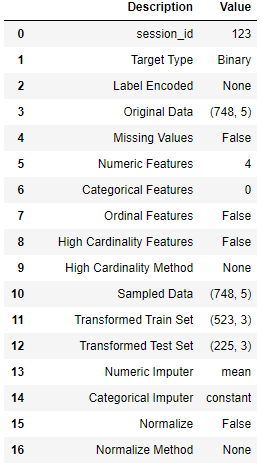
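To make the idea of data type inference concrete, here is a minimal sketch in plain Python. It is not PyCaret's inference logic, which is more involved; the function name `infer_feature_types` and the sample columns are hypothetical and exist only for this illustration.

```python
# Illustrative only: a toy type check, not PyCaret's inference logic.
def infer_feature_types(rows):
    """Label each column 'Numeric' or 'Categorical' from its non-missing values."""
    types = {}
    for column in rows[0]:
        values = [row[column] for row in rows if row[column] is not None]
        # bool is a subclass of int in Python, so exclude it explicitly.
        is_numeric = all(
            isinstance(v, (int, float)) and not isinstance(v, bool) for v in values
        )
        types[column] = "Numeric" if is_numeric else "Categorical"
    return types


# Hypothetical rows; the column names are made up for this sketch.
sample_rows = [
    {"age": 34, "weight": 70.5, "blood_group": "A"},
    {"age": 51, "weight": None, "blood_group": "O"},
    {"age": 29, "weight": 82.0, "blood_group": "B"},
]

feature_types = infer_feature_types(sample_rows)
for name, kind in feature_types.items():
    print(f"{name}: {kind}")
```

A listing like this is what the user is asked to confirm in the setup dialogue before continuing.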

Data Cleaning

Users sometimes forget to clean the data, i.e. to find the missing values and fill them in. Setup handles this by identifying the missing values. For a numerical feature, missing values may be filled with the mean or median. For a categorical feature, missing values may be filled with the most frequent value. The parameters that control this are numeric_imputation and categorical_imputation.
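The imputation strategies described above can be sketched in plain Python as follows. This is only an illustration of the idea and not PyCaret's code; the helper `impute` and the sample values are hypothetical.

```python
from statistics import mean, median, mode


def impute(values, strategy):
    """Replace None entries with the mean, median, or mode of the observed values."""
    observed = [v for v in values if v is not None]
    fill = {"mean": mean, "median": median, "mode": mode}[strategy](observed)
    return [fill if v is None else v for v in values]


# Hypothetical feature columns with one missing entry each.
numeric_feature = [1, None, 2, 6]
categorical_feature = ["A", "O", None, "A"]

print(impute(numeric_feature, "mean"))      # numeric: fill with the mean
print(impute(numeric_feature, "median"))    # numeric: fill with the median
print(impute(categorical_feature, "mode"))  # categorical: most frequent value
```

The mean and median can differ noticeably on skewed features, which is one reason to choose the numeric strategy deliberately.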

Data Splitting

By default, data is split into training and testing data in the ratio 70:30.
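As a rough sketch of what a 70:30 split means, the snippet below shuffles a list of row indices and cuts it at 70%. It is not PyCaret's internal splitting code; the function `train_test_split_rows`, the seed, and the use of plain integers as stand-ins for rows are assumptions made for this illustration. The row count of 748 matches the blood dataset used later in this article.

```python
import random


def train_test_split_rows(rows, train_fraction=0.7, seed=42):
    """Shuffle a copy of the rows and cut it into train and test parts."""
    shuffled = rows[:]
    random.Random(seed).shuffle(shuffled)  # seeded for repeatability
    cut = int(len(shuffled) * train_fraction)  # fractional part is dropped
    return shuffled[:cut], shuffled[cut:]


# Integers stand in for the 748 rows of a dataset.
rows = list(range(748))
train, test = train_test_split_rows(rows)
print(len(train), len(test))
```

With 748 rows, this cut leaves 523 rows for training and 225 for testing.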

For better understanding let’s consider an example:

import pycaret

from pycaret.datasets import get_data

dataset = get_data('blood')

The same blood dataset is used for this example.

from pycaret.classification import *

classify = setup(data=dataset, target='Class')

The dataset has shape (748, 5) and, by default, is split in the ratio 70:30. The target type is identified as binary, and the dataset consists of only numerical features. Only a few of the default steps have been discussed so far; let’s look in detail at the other important pre-processing steps.

Normalization

Normalization is an important technique that rescales numerical values to a similar scale without distorting the differences between them or losing information. The default method in PyCaret is ‘zscore’.

normalize_method: string, default = ‘zscore’
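For intuition, a z-score subtracts the feature's mean from each value and divides by the feature's standard deviation. The sketch below shows this in plain Python. It assumes the population standard deviation and is not PyCaret's implementation; the function `zscore` and the sample values are hypothetical.

```python
from statistics import mean, pstdev


def zscore(values):
    """Rescale values to zero mean and unit (population) standard deviation."""
    mu = mean(values)
    sigma = pstdev(values)  # assumption: population standard deviation
    # Note: a constant feature has sigma == 0 and is not handled here.
    return [(v - mu) / sigma for v in values]


# Hypothetical values for one numerical feature.
feature = [250, 500, 750, 1000, 1250]
scaled = zscore(feature)
print([round(z, 3) for z in scaled])
```

After scaling, the feature has a mean of 0 and a standard deviation of 1, so features measured in very different units become comparable.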